Q: How does HadoopDrive connect to a Hadoop cluster?

HadoopDrive uses the WebHDFS REST API, so make sure your Hadoop administrator has enabled WebHDFS. The default namenode ports are http://hostname:50070 for non-SSL and https://hostname:50470 for SSL communication (unless your administrator has set different ones). See the Cloudera blog for more details about the default HDFS ports.
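To make the connection details concrete, here is a minimal sketch of how a WebHDFS URL is put together from the namenode host, port and HDFS path. The `/webhdfs/v1` prefix and the `op` parameter come from the WebHDFS REST API; the helper name `webhdfs_url` and the host `namenode.example.com` are only illustrative, and this is not HadoopDrive's own code.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, path, op, port=None, ssl=False, **params):
    """Build a WebHDFS REST URL for an HDFS path and operation (illustrative helper)."""
    scheme = "https" if ssl else "http"
    if port is None:
        # Default namenode ports mentioned in this FAQ; your cluster may differ.
        port = 50470 if ssl else 50070
    query = urlencode({"op": op, **params})
    return f"{scheme}://{host}:{port}/webhdfs/v1{quote(path)}?{query}"


# Hypothetical host name, used only for illustration.
print(webhdfs_url("namenode.example.com", "/user/alice", "LISTSTATUS"))
```

Opening such a URL in a browser or with any HTTP client is a quick way to check that WebHDFS is enabled and reachable before configuring HadoopDrive.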

Q: I can browse the HDFS structure, but I cannot perform any file operations (CREATE, OPEN, etc.)

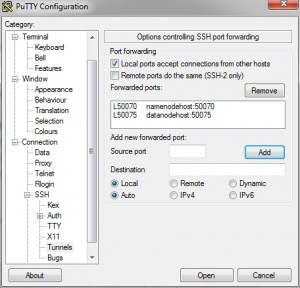

This happens because the HDFS file structure is stored on the namenode. For file operations (CREATE, OPEN, etc.) the namenode redirects the application to a different host (a datanode) by returning an HTTP redirect response (a 3xx status such as 302 or 307) with a “Location” header. Sometimes your administrator may have disabled HTTP access to the datanodes for security reasons. To solve this issue you have two options:

If you choose the second option, you also need to select “redirect 300,302..307 to localhost” in the HadoopDrive settings window. From then on, every host name received in a redirect response from WebHDFS is replaced by 127.0.0.1, so the request is executed against localhost and sent to the datanode through the SSH tunnel.

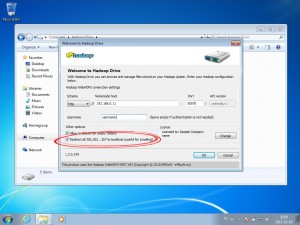
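The host replacement described above can be sketched as follows. This is only an illustration of the idea, not HadoopDrive's actual implementation: the function name `redirect_to_localhost` and the example datanode URL are assumptions, and the sketch assumes the SSH tunnel listens on the same local port as the port named in the redirect.

```python
from urllib.parse import urlsplit, urlunsplit


def redirect_to_localhost(location):
    """Replace the host in a redirect Location URL with 127.0.0.1, keeping the port."""
    parts = urlsplit(location)
    # Keep the original port so the request reaches the matching local tunnel port.
    netloc = "127.0.0.1" if parts.port is None else f"127.0.0.1:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


# Hypothetical Location header value returned by a namenode.
location = "http://datanode1.example.com:50075/webhdfs/v1/tmp/a.txt?op=OPEN&offset=0"
print(redirect_to_localhost(location))
```

Only the host part changes; the path, the query string and the port stay as the namenode sent them.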

Q: How do I configure HadoopDrive or change its settings?

When you open HadoopDrive for the first time, the settings window is shown automatically. The mandatory fields are the namenode hostname (or IP) and port. To change the settings later, click “HadoopDrive Settings” in the toolbar while you are browsing files via HadoopDrive.

Note that the current HadoopDrive version (1.3.0) supports only one security model: “Authentication when security is off”. This means that user.name=USERNAME is added to every HTTP request (Hadoop Authentication). If you would like to use HadoopDrive but rely on a different authentication method, please contact us so we can add such a feature (bigdotsoftware@bigdotsoftware.pl).
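As an illustration of this security model, the sketch below appends `user.name` to the query string of a WebHDFS request URL. The helper name `with_user_name`, the host `nn` and the user `alice` are hypothetical; how HadoopDrive builds its requests internally is not documented here.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_user_name(url, username):
    """Return the URL with user.name=<username> set as a query parameter."""
    parts = urlsplit(url)
    # Drop any existing user.name so the parameter appears exactly once.
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k != "user.name"]
    query.append(("user.name", username))
    return urlunsplit((parts.scheme, parts.netloc, parts.path,
                       urlencode(query), parts.fragment))


# Hypothetical namenode and user.
print(with_user_name("http://nn:50070/webhdfs/v1/tmp?op=LISTSTATUS", "alice"))
```

Because the user name is sent as a plain parameter and is not verified, this model is only suitable for clusters where Hadoop security is switched off.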

Q: What file operations are available in HadoopDrive?

HadoopDrive supports CREATE, OPEN, MKDIRS, RENAME, DELETE, GETFILESTATUS and LISTSTATUS. If you need more operations to be supported, let us know; we are happy to add new features to HadoopDrive (bigdotsoftware@bigdotsoftware.pl).
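For reference, each of these operations corresponds to a WebHDFS `op` value sent with a particular HTTP method. The table below follows the WebHDFS REST API; the names `WEBHDFS_METHODS` and `http_method` are just illustrative and say nothing about HadoopDrive's internals.

```python
# HTTP method used by the WebHDFS REST API for each operation listed above.
WEBHDFS_METHODS = {
    "CREATE": "PUT",
    "OPEN": "GET",
    "MKDIRS": "PUT",
    "RENAME": "PUT",
    "DELETE": "DELETE",
    "GETFILESTATUS": "GET",
    "LISTSTATUS": "GET",
}


def http_method(op):
    """Look up the HTTP method for a WebHDFS operation name (case-insensitive)."""
    return WEBHDFS_METHODS[op.upper()]


for op in WEBHDFS_METHODS:
    print(f"{http_method(op):6} ?op={op}")
```

This can help when reading proxy or firewall logs: browsing folders only produces GET requests, while uploading, renaming or deleting files needs PUT and DELETE to be allowed as well.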

Q: Do I need a license?

That is up to you. Licenses are cheap and take the form of a project donation (licenses start from $5). The unlicensed version is still fully functional, but it has branding enabled: HadoopDrive shows 2 virtual (fake) files in the root folder, README.txt and README.jpg. These files are virtual; they are neither stored on nor copied to your Hadoop cluster. We are grateful for every donation, because donations allow us to maintain and develop our product. So, if you like HadoopDrive, please consider purchasing a license for each workstation or making a project donation.

Q: How do I apply a license key?

Open the configuration window and click the ‘Register’ button. A window with steps will appear. Please follow those steps to license your HadoopDrive.

Q: What is a namenode/datanode?

The answer below is quoted from the Apache Hadoop website:

HDFS has a master/slave architecture. An HDFS cluster consists of a single NameNode, a master server that manages the file system namespace and regulates access to files by clients. In addition, there are a number of DataNodes, usually one per node in the cluster, which manage storage attached to the nodes that they run on. HDFS exposes a file system namespace and allows user data to be stored in files. Internally, a file is split into one or more blocks and these blocks are stored in a set of DataNodes. The NameNode executes file system namespace operations like opening, closing, and renaming files and directories. It also determines the mapping of blocks to DataNodes. The DataNodes are responsible for serving read and write requests from the file system’s clients. The DataNodes also perform block creation, deletion, and replication upon instruction from the NameNode.

The NameNode and DataNode are pieces of software designed to run on commodity machines. These machines typically run a GNU/Linux operating system (OS). HDFS is built using the Java language; any machine that supports Java can run the NameNode or the DataNode software. Usage of the highly portable Java language means that HDFS can be deployed on a wide range of machines. A typical deployment has a dedicated machine that runs only the NameNode software. Each of the other machines in the cluster runs one instance of the DataNode software. The architecture does not preclude running multiple DataNodes on the same machine but in a real deployment that is rarely the case.

The existence of a single NameNode in a cluster greatly simplifies the architecture of the system. The NameNode is the arbitrator and repository for all HDFS metadata. The system is designed in such a way that user data never flows through the NameNode.

Q: How do I install HadoopDrive?

Installation in three steps:

- Download the latest HadoopDrive from here

- Launch the installer and click “Next”

- Enjoy 🙂